Michael Dennis

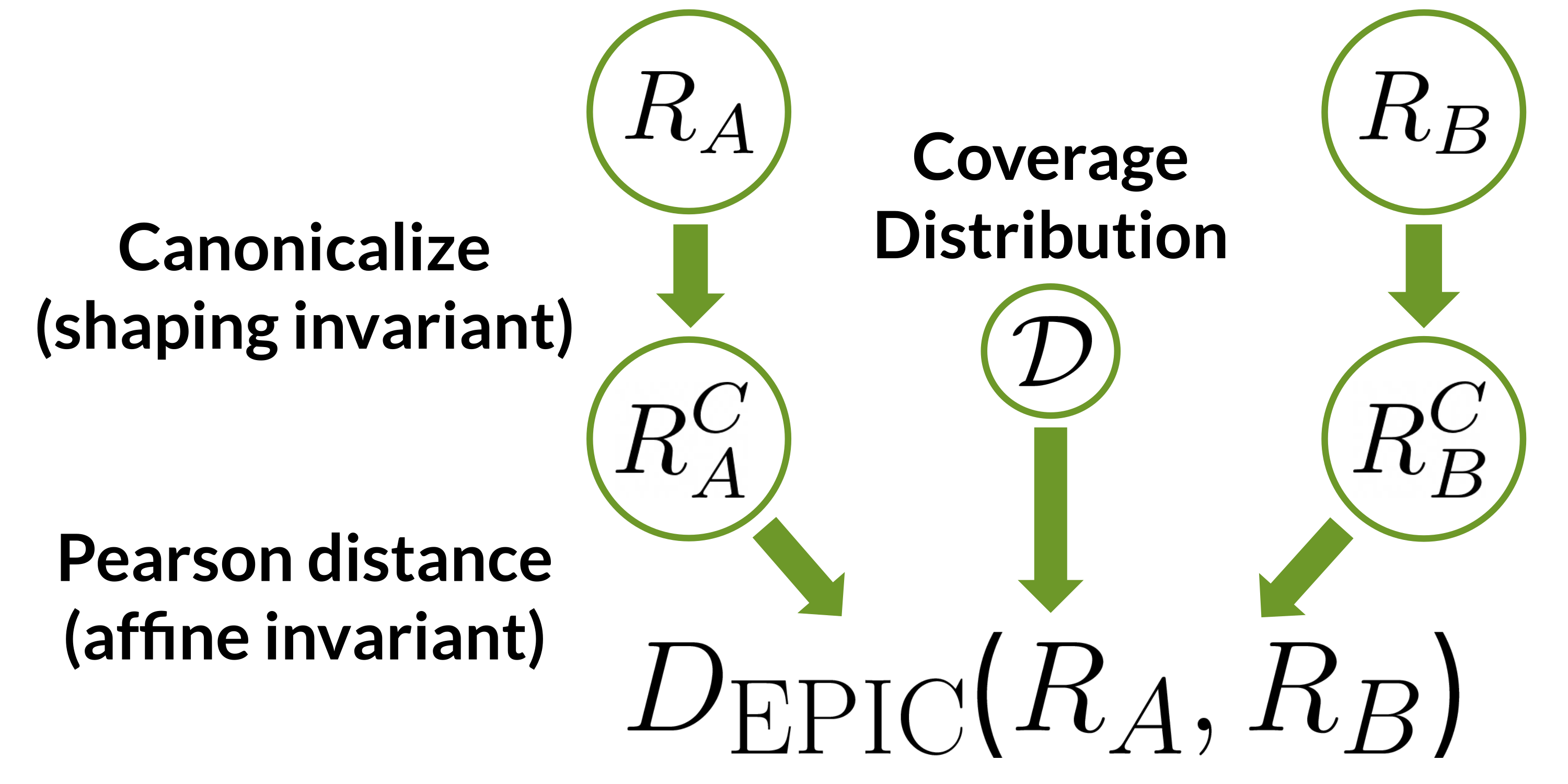

Real-world environments are complicated, too complicated to be completely specified in any simulation or model. Despite this, we need our agents to solve real-world problems and manage real-world complexity. I am interested in this intersection between Problem Specification and Open-Ended Complexity: studying the boundary between what complexity must be described and what can be artificially generated. To this end, we have formalized the problem of Unsupervised Environment Design (UED), which aims to build complex and challenging environments automatically to promote efficient learning and transfer. This framework has deep connections to decision theory, which allows us to make guarantees about how the resulting policies would perform in human-designed environments without ever having been trained on them.

I'm currently a Research Scientist on Google DeepMind's Open-Endedness team. I was previously a Ph.D. student at the Center for Human-Compatible AI (CHAI), advised by Stuart Russell. Prior to my research in AI, I conducted research on computer science theory and computational geometry.